Devlog: Mobile touch controls from scratch in HTML5

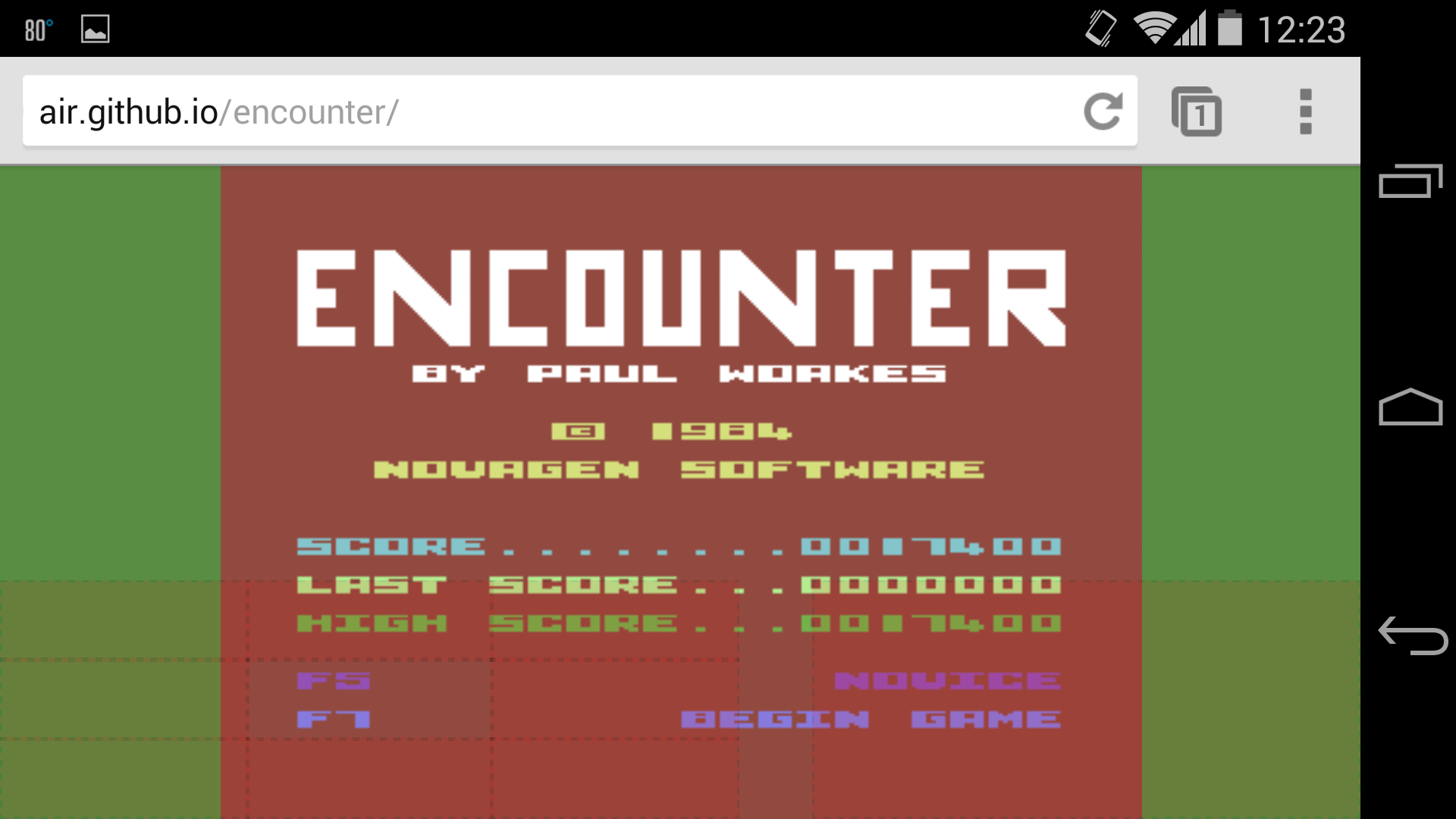

First up, grab a WebGL-capable mobile device - I recommend a Nexus - and play the current build to see what I’m talking about.

What kind of touch controls do we want for Encounter?

It’s possible to get quite sophisticated analog controls on a phone. Xibalba is a good example in the first person shooter category. You can choose to turn either a little, or a lot by sweeping your thumb on the screen.

For C64 games though we’re emulating the simple 8-way controls of a joystick, so complex analog turning isn’t appropriate. Think of this bad boy:

I had a few goals in mind for the controls.

- Don’t pursue the joystick metaphor. Ditch it and go NES-style: Provide a simple 8-way directional pad (d-pad), and a single fire button.

- Make it visually clear where the active zones are for touching. No guessing should be required.

- Make the virtual d-pad act like a physical one as much as possible. This means being able to smoothly transition from one position to another without lifting your thumb.

- Don’t rely on frameworks. Implement it in plain JavaScript. It would have been easy to pick up something like hammer.js, but where’s the learning in that?

For reference, all my code is here in Touch.js.

1. Get visual

Surprisingly for a first person game, I currently optimise for portrait orientation. The extra space at the bottom of the screen is ideal for the all-important radar.

Show the buttons

Buttons are just rectangles overlaid on top of the display.

To make the touch zones clear I picked a red colour with reasonable contrast against a green background - the colour of level one - and made it 90% transparent. Add a dashed border to highlight the edges and we’re done. This is all done in plain CSS:

```javascript
Touch.CONTROLS_CSS_NOFILL = 'opacity:0.1; z-index: 11000; border-style: dashed; border-width: 1px';
Touch.CONTROLS_CSS = 'background-color: red; ' + Touch.CONTROLS_CSS_NOFILL;
```

Use percentages

Rather than use fixed sizes for the virtual buttons - 50px high or whatever - I define them as percentages of the screen size. This gives us a fair shot at supporting all resolutions and orientations. Writing special cases for a zillion devices isn’t cool.

Given the portrait style these numbers work well:

```javascript
Touch.DPAD_BUTTON_WIDTH_PERCENT = 18;
Touch.DPAD_BUTTON_HEIGHT_PERCENT = 12;
```

We’re literally just saying a d-pad button width is 18% of the screen. It looks like this (d-pad is at bottom left):

This is how it turns out in landscape on the Nexus 5 - the red clashes a little here but hopefully you get the idea:

There’s definitely scope to adjust this further, but good enough for now.

The dead zone

Notice we explicitly put a dead zone in the middle of the d-pad. We need a place for the thumb to slide when no movement inputs are needed; the thumb shouldn’t have to leave the screen at any time.
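To make the layout concrete, here is a minimal sketch of how eight buttons and a dead zone could be positioned purely with percentages. It reuses the two constants above; the 3x3 `DPAD_GRID`, the button ids other than `upleft`, and the `dpadLayout` helper are assumptions for illustration, not code from Touch.js.

```javascript
// Values taken from the post; everything else in this block is hypothetical.
const DPAD_BUTTON_WIDTH_PERCENT = 18;
const DPAD_BUTTON_HEIGHT_PERCENT = 12;

// 3x3 grid listed bottom row first; null marks the dead zone in the centre.
const DPAD_GRID = [
  ['downleft', 'down', 'downright'],
  ['left',     null,   'right'],
  ['upleft',   'up',   'upright']
];

// Returns an object mapping button id -> CSS text, with every
// position and size expressed as a percentage of the screen.
function dpadLayout() {
  const layout = {};
  DPAD_GRID.forEach(function (row, rowIndex) {
    row.forEach(function (id, colIndex) {
      if (id === null) return; // dead zone: no element, so nothing to press
      layout[id] =
        'position: absolute; ' +
        'left: ' + (colIndex * DPAD_BUTTON_WIDTH_PERCENT) + '%; ' +
        'bottom: ' + (rowIndex * DPAD_BUTTON_HEIGHT_PERCENT) + '%; ' +
        'width: ' + DPAD_BUTTON_WIDTH_PERCENT + '%; ' +
        'height: ' + DPAD_BUTTON_HEIGHT_PERCENT + '%;';
    });
  });
  return layout;
}
```

Because the centre cell produces no element at all, a thumb resting there touches only the background, which is what makes it a dead zone.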

2. It’s all about events

JavaScript provides a solid Touch API. When touches occur, they’re given to you as events occurring against the relevant DOM element. The hard work of tracking multiple fingers moving around is done for you.

So you can define a <div> for a button, place and colour it on the screen, and attach a touch handler. A touch on the button will trigger the handler, and touches against the background will not. Easy!

All the other considerations - e.g. size and placement of the button - become pure CSS issues. We’re just styling a div.

The bible for the Touch API is the Mozilla reference doc. It’s worth reading, but you won’t get the whole picture until you’ve wired up some buttons and observed what happens in practice.

In the real world, the events you worry about are:

```
touchstart // finger went down on this element
touchend   // finger was lifted off the screen
touchmove  // finger is moving on the screen
```

I never saw touchleave or touchcancel in my testing.

So the naive implementation for a button is just this:

```javascript
button.addEventListener('touchstart', function(event) {
  // change the state of the controls, e.g. start shooting
});
// do this again for touchend and reverse the state. Done!
```

Hooking up the controls

All buttons should work in the same way. The only thing that differs is which bits of state we twiddle when they’re pressed.

So I have a generic factory function createDPadButton that does the donkey work of creating a div and attaching handlers. You pass in a couple of extra functions to define what should happen when the button is pressed, and unpressed. Unpressed is totally a word.

```javascript
// pass our button id, along with our press() and unpress() functions.
Touch.dpad['upleft'] = Touch.createDPadButton('upleft', function() {
  Controls.current.moveForward = true;
  Controls.current.turnLeft = true;
}, function() {
  Controls.current.moveForward = false;
  Controls.current.turnLeft = false;
});
```

This gets us a functional button, and all that’s left is to move it into position, above two other buttons in this case:

```javascript
Touch.dpad['upleft'].style.bottom = (Touch.DPAD_BUTTON_HEIGHT_PERCENT * 2) + '%';
```
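For readers who want to see the shape of such a factory, here is a rough sketch of the naive version. It is not the actual `createDPadButton` from Touch.js: the name `createButton`, its parameter list, and passing the document in as `doc` (done here so the function can be exercised without a browser) are all assumptions.

```javascript
// Hypothetical factory: creates a div, styles it, and wires up press/unpress.
// In a page you would pass the global `document` as `doc`.
function createButton(doc, id, cssText, press, unpress) {
  const button = doc.createElement('div');
  button.id = id;
  button.style.cssText = cssText;

  // Finger went down on this element: switch the control on.
  button.addEventListener('touchstart', function () {
    press();
  });

  // Finger was lifted: switch the control off again.
  button.addEventListener('touchend', function () {
    unpress();
  });

  doc.body.appendChild(button);
  return button;
}
```

Every button goes through the same path, so the only per-button code is the pair of functions that flip the relevant control state.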

3. Polish and playability

The approach above gives you functional controls, but they don’t feel good. You have to peck at the buttons to get them to work. We want a single constant touch to work as you slide it around. Should be easy, right?

Smooth transitions

The Touch event system gets really interesting - i.e. unintuitive - when you start sliding your finger around.

Once you put your finger down on button foo, foo will receive all events for that finger until you lift it off the glass. Even if we move off the button itself and slide across a bunch of other perfectly good buttons, greedy old foo will receive all the events.

So a touchmove event does not tell you which button is being pressed. We need something like this pseudocode:

- A touch is in progress and button foo got a touchmove. This tells us that:

  - The touch started on foo, and

  - The finger is moving.

- Is the finger actually on foo right now? If so, nothing interesting has happened. The user is making small dragging motions within the bounds of a single button.

- Is the finger actually on button bar? If so, the user has moved to another button. We need to unpress foo, and press bar. Regardless, all events will still be sent to foo. Totally clear.

- Is the finger actually nowhere on any of our buttons? In this case, the finger is either in the dead zone (no problem), or is flailing around the screen somewhere (get a bigger device bro). Unpress everything and wait for the drag to come back somewhere useful.

You can see the above implemented in Touch.js in the handler beginning button.addEventListener('touchmove'....

Two bits of magic are required to make this work:

- We need to be able to tell where the finger is right now, and the event only tells us indirectly.

  - The most useful clue came from this answer on Stack Overflow, Crossing over to new elements during touchmove.

  - We can use document.elementFromPoint, which translates an X,Y coordinate - usefully supplied in the event - into a DOM element. It’s not guaranteed on all platforms, but it works on the ones I care about (sorry IE).

- We need to keep track of which button is actively being pressed. In the code this is Touch.lastDPadPressed.

The finished touchmove logic is relatively simple but it took a great deal of experimentation to see how the events actually behave.
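The steps above can be sketched roughly as follows. This is a simplified illustration rather than the handler from Touch.js: `makeDPadTracker`, the `buttons` map, and the injected `elementAt` lookup are hypothetical names, and the local `lastPressed` variable plays the role that `Touch.lastDPadPressed` plays in the real code.

```javascript
// buttons:   { id: { press: fn, unpress: fn } } for every d-pad button
// elementAt: function (x, y) returning the element at that point, or null.
//            In a browser: function (x, y) { return document.elementFromPoint(x, y); }
function makeDPadTracker(buttons, elementAt) {
  let lastPressed = null; // id of the button currently held, or null

  function update(id) {
    if (id === lastPressed) return;                 // small drag within one button
    if (lastPressed !== null) buttons[lastPressed].unpress();
    if (id !== null) buttons[id].press();           // slid onto a different button
    lastPressed = id;                               // null: dead zone or off the pad
  }

  return {
    // Attach to both touchstart and touchmove on every d-pad button.
    onMove: function (event) {
      const touch = event.targetTouches[0];
      if (!touch) return;
      // The event target is always the button where the touch began,
      // so ask the document what is really under the finger.
      const el = elementAt(touch.clientX, touch.clientY);
      const known = el && Object.prototype.hasOwnProperty.call(buttons, el.id);
      update(known ? el.id : null);
    },
    // Attach to touchend: the finger left the glass, so release everything.
    onEnd: function () {
      update(null);
    }
  };
}
```

Injecting `elementAt` keeps the press/unpress bookkeeping separate from the DOM lookup, which also makes the transition logic easy to exercise with fake coordinates.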

Unwanted events: Screen dragging

Another issue to look out for is drag behaviour on your background. If the browser believes that your canvas doesn’t match the window size exactly, the user will be able to drag your display around. It’s distracting.

If, like me, your canvas isn’t quite perfect, work around the issue by consuming all touchmove events that occur outside of your buttons:

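```javascript
// disable dragging
// FIXME this shouldn't be needed but we go a few px off bottom of screen, which enables drag
// passive: false is needed in newer browsers, which otherwise treat
// document-level touchmove listeners as passive and ignore preventDefault()
document.addEventListener('touchmove', function(event) {
  event.preventDefault();
}, { passive: false });
```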

4. Platform detection

If you play on the desktop, you don’t want to see the red buttons. How do you know from JavaScript if you’re on a touch device?

This is tougher than it looks. The best effort I have so far is:

```javascript
UTIL.platformSupportsTouch = function()
{
  // does the window object have a property named ontouchstart?
  return 'ontouchstart' in window;
};
```

This worked well until a recent update of Windows 8 - I assume in support of Surface devices. Even though my Windows 8 desktop PC has no support for touch, the OS fakes it anyway and we get a false positive.

I notice the Ghost blogging platform - powering this page - suffers from the same bug: the editor thinks I’m on mobile when I use Windows 8.

Detecting touch reliably seems a bit of a mess judging from Stack Overflow - let me know if you know a sure method.
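One commonly suggested refinement is to combine several signals rather than trusting `ontouchstart` alone. The sketch below is a heuristic, not a sure method, and it has not been verified against the Windows 8 false positive described above. The function name and the `win` parameter (passed in so the check can be run against a stand-in object) are assumptions; `navigator.maxTouchPoints` and the `any-pointer: coarse` media query are standard browser features, though older browsers may lack them.

```javascript
// Heuristic: true when the environment claims touch support AND, where the
// media query is available, reports at least one coarse (finger-like) pointer.
function platformProbablySupportsTouch(win) {
  if (!win) return false;
  const nav = win.navigator || {};

  // Signal 1: the touch event property exists, or touch points are reported.
  const claimsTouch = ('ontouchstart' in win) || (nav.maxTouchPoints || 0) > 0;
  if (!claimsTouch) return false;

  // Signal 2: if matchMedia is missing, fall back to signal 1 alone.
  if (typeof win.matchMedia !== 'function') return true;
  return win.matchMedia('(any-pointer: coarse)').matches;
}

// In a page: platformProbablySupportsTouch(window)
```

The second check is there to filter out machines that expose touch events without having a touchscreen attached, at the cost of depending on how accurately the browser reports its pointers.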

Last tip: Chrome on the desktop has a neat ability to emulate touchscreen devices. It’s not perfect but it saves a bunch of time on layout issues.

Happy touching!

Appendix: Use the code

The simplest way to get this code running:

- Get the code from GitHub.

- Create a new HTML file with:

```html
<html><body>
  <script src="js/Touch.js"></script>
  <script src="js/UTIL.js"></script>
  <script> Touch.init(); </script>
</body></html>
```

- Load this on a device with touch enabled - or using emulation in Chrome - to see pressable buttons appear on the page.

Note that nothing will happen on a desktop browser, because of these lines in Touch.js:

```javascript
// don't init touch on desktops
if (!UTIL.platformSupportsTouch())
{
  return;
}
```